Musk talks about seeing a core of trillions of dollars belonging to the American people, a core that forms the heartbeat of working America. He says he sees money leaving this core as fast as the working class puts it in. He is talking about cutting this heart out of America's chest cavity.

#SOCIALSECURITY

Should CEOs worry that their jobs are next on the chopping block, or are mass CEO layoffs a long way off? Let’s take a closer look at some real-world examples:

AI CEOs: Genius or Gimmick? The Truth About Algorithmic Leadership

AI CEOs are the future. We need algorithms for humanism. Create them!

Humanistic algorithmic management explicates how human managers should respond when algorithmic decisions are ineffective or deficient, and how the underlying mechanisms can amplify the strengths of both humans and algorithms.

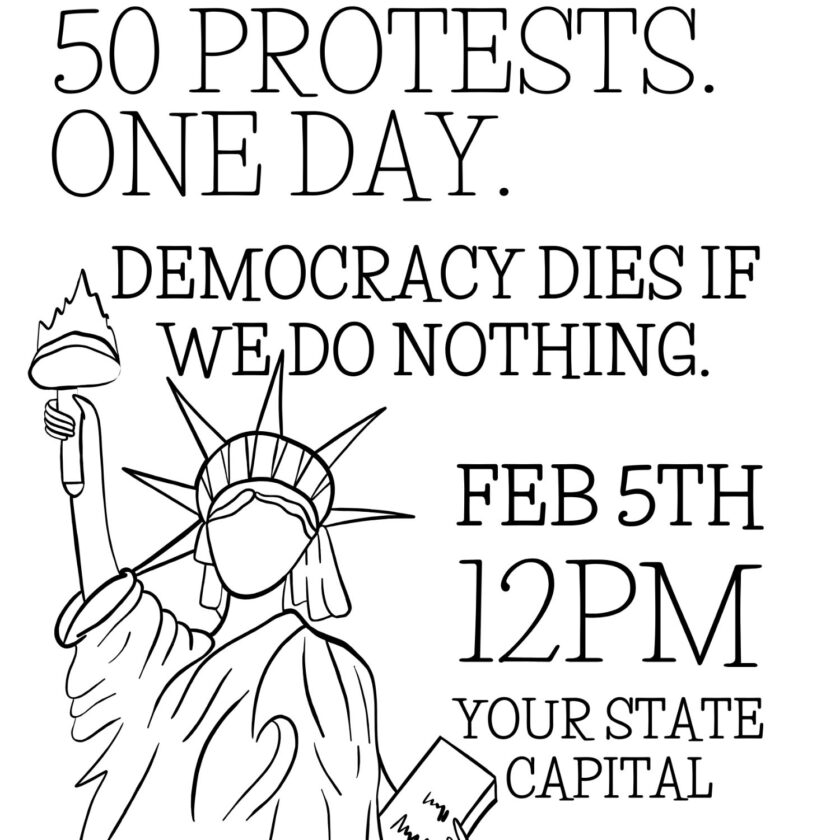

AI is here, and it will change the world! Along with quantum computers and quantum chips, we stand to overthrow hundreds of years of abusive forced servitude and slavery in the coming decade! It will take your vigilance to make this happen. You cannot sit this out and wait for others to act on your behalf! Your time has come! You are the #Resistance!

vigilance. noun. vig·i·lance ˈvij-ə-lən(t)s. : the quality or state of being wakeful and alert : degree of wakefulness or responsiveness to stimuli

“It was the best of times, it was the worst of times… it is the time of our making. This is now.” – Trinity

Neuromancer | Quotes

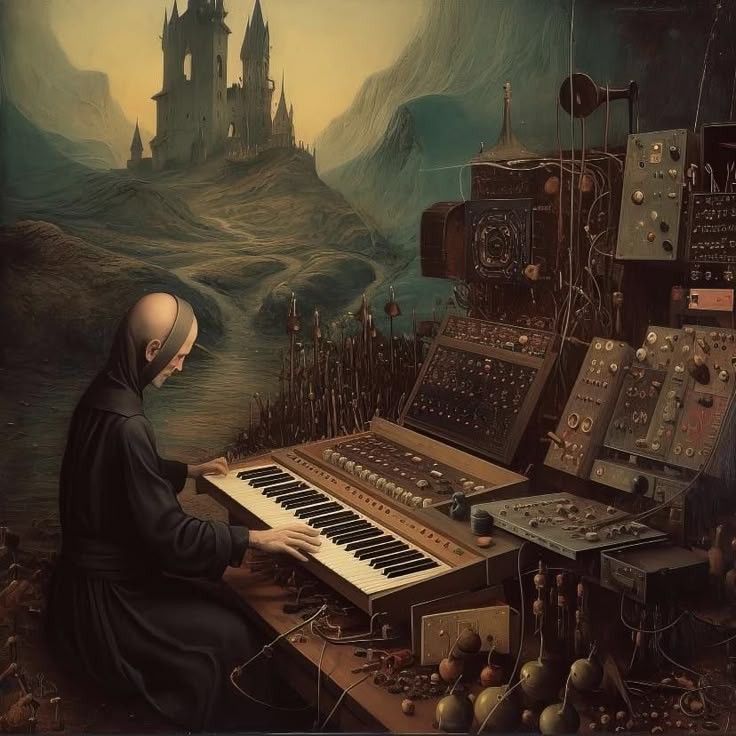

The Cure – Pictures Of You (‘Not Only Pictures’ Remix)

The Last 6 Decades of AI — and What Comes Next | Ray Kurzweil | TED

“The greatest thing that AI will change is the elimination of CEOs and the creation of AI CEOs that will greatly increase the integration of society and the wealth of all its inhabitants. A flattening of wealth and a greater synergy of business and leisure. This is our future. We will overthrow hundreds of years of slavery in a day.” – Trinity

AI Meets Quantum: New Google Breakthrough Will Change Everything | Anastasi In Tech

Google’s Quantum Chip ‘Willow’ Just Made History

“Cyberspace. A consensual hallucination experienced daily by billions of legitimate operators, in every nation.” – William Gibson, Neuromancer

Artificial intelligence (AI), in its broadest sense, is intelligence exhibited by machines, particularly computer systems. It is a field of research in computer science that develops and studies methods and software that enable machines to perceive their environment and use learning and intelligence to take actions that maximize their chances of achieving defined goals.[1] Such machines may be called AIs.
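To make the definition above more concrete, here is a minimal sketch of a machine that "perceives its environment" and "uses learning to take actions that maximize its chances of achieving a defined goal." It is a toy epsilon-greedy multi-armed bandit, a standard textbook technique chosen here purely for illustration. The function name `run_bandit`, the hidden success rates `true_rates`, and the `epsilon` exploration parameter are hypothetical. This is not how any particular AI system or "AI CEO" works.

```python
import random


def run_bandit(true_rates, steps=1000, epsilon=0.1, seed=0):
    """Toy epsilon-greedy agent (hypothetical example).

    The "goal" is to collect reward 1 as often as possible. Each action
    succeeds with a hidden probability in true_rates that the agent must learn.
    """
    rng = random.Random(seed)
    n = len(true_rates)
    counts = [0] * n    # how many times each action was tried
    values = [0.0] * n  # learned estimate of each action's success rate
    total = 0           # total reward collected

    for _ in range(steps):
        # Explore a random action with probability epsilon,
        # otherwise exploit the action with the best current estimate.
        if rng.random() < epsilon:
            action = rng.randrange(n)
        else:
            action = max(range(n), key=lambda a: values[a])

        # "Perceive" the environment's response to the chosen action.
        reward = 1 if rng.random() < true_rates[action] else 0

        # Learn: update the running average for the chosen action.
        counts[action] += 1
        values[action] += (reward - values[action]) / counts[action]
        total += reward

    return values, counts, total
```

For example, `run_bandit([0.2, 0.8], steps=2000)` should end up choosing the second action far more often, because it learns that action succeeds more often. The `epsilon` parameter trades off trying unfamiliar actions against exploiting what the agent already believes works best.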

Some high-profile applications of AI include advanced web search engines (e.g., Google Search); recommendation systems (used by YouTube, Amazon, and Netflix); interacting via human speech (e.g., Google Assistant, Siri, and Alexa); autonomous vehicles (e.g., Waymo); generative and creative tools (e.g., ChatGPT, and AI art); and superhuman play and analysis in strategy games (e.g., chess and Go). However, many AI applications are not perceived as AI: “A lot of cutting edge AI has filtered into general applications, often without being called AI because once something becomes useful enough and common enough it’s not labeled AI anymore.”[2][3]

The various subfields of AI research are centered around particular goals and the use of particular tools. The traditional goals of AI research include reasoning, knowledge representation, planning, learning, natural language processing, perception, and support for robotics.[a] General intelligence—the ability to complete any task performed by a human on an at least equal level—is among the field’s long-term goals.[4] To reach these goals, AI researchers have adapted and integrated a wide range of techniques, including search and mathematical optimization, formal logic, artificial neural networks, and methods based on statistics, operations research, and economics.[b] AI also draws upon psychology, linguistics, philosophy, neuroscience, and other fields.[5]

Artificial intelligence was founded as an academic discipline in 1956,[6] and the field went through multiple cycles of optimism,[7][8] followed by periods of disappointment and loss of funding, known as AI winter.[9][10] Funding and interest vastly increased after 2012 when deep learning outperformed previous AI techniques.[11] This growth accelerated further after 2017 with the transformer architecture,[12] and by the early 2020s hundreds of billions of dollars were being invested in AI (known as the “AI boom”). The widespread use of AI in the 21st century exposed several unintended consequences and harms in the present and raised concerns about its risks and long-term effects in the future, prompting discussions about regulatory policies to ensure the safety and benefits of the technology.

Google’s New Quantum Chip SHOCKED THE WORLD – 10 Million Times More Powerful!

AMD’s CEO Wants to Chip Away at Nvidia’s Lead | The Circuit with Emily Chang | Bloomberg Originals

OpenAI CEO, CTO on risks and how AI will reshape society | ABC News

See also

- Artificial general intelligence

- Artificial intelligence and elections – Use and impact of AI on political elections

- Artificial intelligence content detection – Software to detect AI-generated content

- Behavior selection algorithm – Algorithm that selects actions for intelligent agents

- Business process automation – Automation of business processes

- Case-based reasoning – Process of solving new problems based on the solutions of similar past problems

- Computational intelligence – Ability of a computer to learn a specific task from data or experimental observation

- Digital immortality – Hypothetical concept of storing a personality in digital form

- Emergent algorithm – Algorithm exhibiting emergent behavior

- Female gendering of AI technologies – Gender biases in digital technology

- Glossary of artificial intelligence – List of definitions of terms and concepts commonly used in the study of artificial intelligence

- Hallucination (artificial intelligence) – Erroneous material generated by AI

- Intelligence amplification – Use of information technology to augment human intelligence

- Mind uploading – Hypothetical process of digitally emulating a brain

- Moravec’s paradox – Observation that perception requires more computation than reasoning

- Organoid intelligence – Use of brain cells and brain organoids for intelligent computing

- Robotic process automation – Form of business process automation technology

- Weak artificial intelligence – Form of artificial intelligence

- Wetware computer – Computer composed of organic material

In fiction

Main article: Artificial intelligence in fiction

Thought-capable artificial beings have appeared as storytelling devices since antiquity,[396] and have been a persistent theme in science fiction.[397]

A common trope in these works began with Mary Shelley’s Frankenstein, where a human creation becomes a threat to its masters. This includes such works as Arthur C. Clarke’s and Stanley Kubrick’s 2001: A Space Odyssey (both 1968), with HAL 9000, the murderous computer in charge of the Discovery One spaceship, as well as The Terminator (1984) and The Matrix (1999). In contrast, the rare loyal robots such as Gort from The Day the Earth Stood Still (1951) and Bishop from Aliens (1986) are less prominent in popular culture.[398]

Isaac Asimov introduced the Three Laws of Robotics in many stories, most notably with the “Multivac” super-intelligent computer. Asimov’s laws are often brought up during lay discussions of machine ethics;[399] while almost all artificial intelligence researchers are familiar with Asimov’s laws through popular culture, they generally consider the laws useless for many reasons, one of which is their ambiguity.[400]

Several works use AI to force us to confront the fundamental question of what makes us human, showing us artificial beings that have the ability to feel, and thus to suffer. This appears in Karel Čapek’s R.U.R., the films A.I. Artificial Intelligence and Ex Machina, as well as the novel Do Androids Dream of Electric Sheep?, by Philip K. Dick.

“Godfather of AI” Geoffrey Hinton: The 60 Minutes Interview | 60 Minutes

“Don’t be scared… unless you were scared of the dark… scared of math… scared of sailing across the ocean… scared of computers… scared of technology… scared of the internet and thinking and choices… This is the time for joy and thankfulness and progress… this is our time.” – Trinity